Syllabus

Unit I

Introduction to Computer: Definition, Computer Hardware & Computer Software

Components: Hardware – Introduction, Input devices, Output devices, Central Processing

Unit, Memory- Primary and Secondary. Software - Introduction, Types – System

and Application.

Computer Languages: Introduction, Concept of Compiler, Interpreter

&Assembler

Problem solving concept: Algorithms – Introduction, Definition,

Characteristics,

Limitations, Conditions in pseudo-code, Loops in pseudo code.

Unit II

Operating system:

Definition, Functions, Types, Classification, Elements of command based and GUI

based operating system.

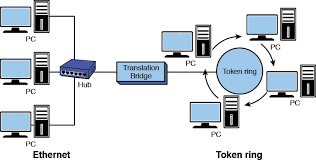

Computer Network: Overview,

Types (LAN, WAN and MAN), Data

Communication, topologies.

Unit III

Internet : Overview, Architecture, Functioning,

Basic services like WWW, FTP,Telnet, Gopher etc., Search engines, E-mail, Web

Browsers.

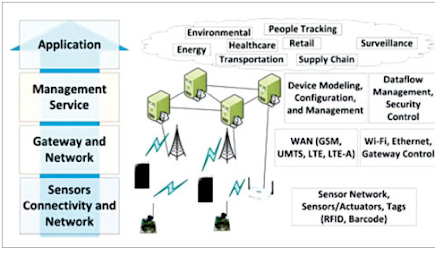

Internet of Things (IoT): Definition, Sensors,

their types and features, Smart

Cities, Industrial Internet of Things.

Unit IV

Block chain: Introduction, overview, features,

limitations and application areas ,fundamentals of Block Chain.

Crypto currencies: Introduction , Applications

and use cases

Cloud Computing: It nature and benefits, AWS,

Google, Microsoft & IBM Services

Unit V

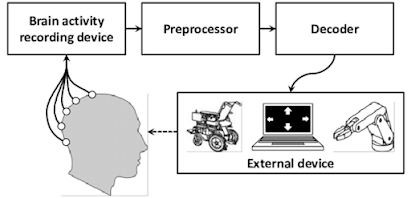

Emerging Technologies: Introduction, overview, features, limitations and application

areas of Augmented Reality, Virtual Reality, Grid computing, Green computing,

Big data analytics, Quantum Computing and Brain Computer Interface

UNIT I

----------

COMPUTER: AN INTRODUCTION

Definition: we can define a

computer in different perspectives like a Computer is a machine, a computer is

an electronic device, a Computer is a data processor, and a computer is a

digital device. Etc. but in a much-summarized way…

A computer is a digital device that takes data process it under some

specific program and produces some meaningful output.

Mainly computer is a digital data problem solver or action performer. It

takes data to process it on the basis of some previously loaded program (set of

instructions) and produces the desired result. A computer can’t do anything

that is not pre-loaded into it.

Like many other machines, a computer is also a system. Any system has

three main components input-process-output. As our human body, we listen from

our ear process it under our brain and when we find some result we can show the

output with the help of mouth (speak) or hand (writing). No system can work

independently. Like many other systems, computers are also a system, it takes

command, instructions, raw data, etc. with the help of specified input devices

and then processes it with a special device that is called a processor (processor

work on preloaded program) and then produces a result. It can be understood

with an example suppose that you want to find the result of 15 * 5 to do this

you can take the help of a computer. The computer takes input (15 * 5) then the

processor processes it on the basis of a table program that is preloaded and

gets the result i.e. 75. But how could you know the result so there are some

output devices which are used to show the result that is produced by the

processor? In very short we can say that a computer is a system for data

processing.

Characteristics of a computer

Speed The speed of computers is very fast. It

can perform in a few seconds the amount of work that human beings can do in an

hour. When we are talking about the speed of a computer we do not talk in terms

of seconds or even milliseconds it is nanoseconds. The computer can perform

about 3 to 4 million arithmetic operations per second. The unit of speed of the

computer is Hertz. A normal desktop computer processor speed is between 3.50 to

4.2 GHz.

Accuracy accuracy of computer is very high or in

other word we can say that if program design is very good Computer cannot do

any mistake. Basically, the accuracy of the program is depending on the

accuracy of program writing. So after a long period of time on program testing

and the debugging program becomes more accurate. The accuracy of a particular

program becomes more accurate Day by day.

Alertness Computer is a machine so it does not get

tired and hence can work for long time without creating any error or mistake.

It means that there is no different to perform one arithmetic operation 10

arithmetic operation or for thousand or millions of arithmetic operations the

result will be same because of there is no issue of tiredness like human

beings. The computer always works on full alert mode.

Versatility Modern era demands versatility from

everyone. In cricket, the all-rounder is more on the demand of the player. The

same player has also liked if the batsman is also a bowler; he is also a good

fielder. In the same way, people want to get more and more work from the same

machine. Nowadays we can perform any task with the help of a computer. we can

type, watch movies, listening songs, internet browse, download files, perform

many government-related official jobs using e-governance and so many other

useful tasks can be done using a single machine it is called versatility. A

computer is capable of performing almost any task provided that the task can be

reduced to a series of logical steps.

V.

A

computer is a digital device that takes data process it under some specific

program and produces some meaningful output.

VI.

Mainly

computer is a digital data problem solver or action performer. It takes data to

process it on the basis of some previously loaded program (set of instructions)

and produces the desired result. A computer can’t do anything that is not

pre-loaded into it.

VII.

Like

many other machines, a computer is also a system. Any system has three main

components input-process-output. As our human body, we listen from our ear

process it under our brain and when we find some result we can show the output

with the help of mouth (speak) or hand (writing). No system can work

independently. Like many other systems, computers are also a system, it takes

command, instructions, raw data, etc. with the help of specified input devices

and then processes it with a special device that is called a processor (processor

work on preloaded program) and then produces a result. It can be understood

with an example suppose that you want to find the result of 15 * 5 to do this

you can take the help of a computer. The computer takes input (15 * 5) then the

processor processes it on the basis of a table program that is preloaded and

gets the result i.e. 75. But how could you know the result so there are some

output devices which are used to show the result that is produced by the

processor? In very short we can say that a computer is a system for data

processing. A computer has a huge storage capacity. We can store millions of

libraries on a single computer. A

computer can store any amount of information because of its secondary storage.

Every piece of information can be stored as long as desired by the user and can

be recalled when required. A byte is a storage unit. Normally 1 TB (TeraByte)

hard disk is used in desktop. Later we will discuss these units in detail.

Artificial Intelligence (AI): Above characteristics belong to some

traditional computer systems nowadays modern computers come with AI. It

means that it can perform much better than previously loaded programs. An artificial

intelligence inbuilt computer can take its own decision. Google search is

based on artificial intelligence it produces the result of searching on

the basis of a particular user searching experience. This type of computer

can change the program it can modify the loaded program

Elements

of a Computer System set up:

There are

mainly five elements of a computer system.

(i) Hardware: the physical part of the computer

system that we can see, touch, and move from one place to another is called

hardware. For example mouse, keyboard, optical scanner, monitor, printer,

processor, etc.

(ii) Software: it is the part of the computer system that we cannot see but the

whole system is based on that part, we call it software. Software is mainly a

collection of programs. And the program is a set of instructions to solve any

given problem or to perform any particular job. All the work of the computer

depends on the program. The computer cannot do anything that is not already

written in the program, that is, what the computer can do. Everything is

already written in the program. Basically, there are two major classifications

of software, namely System Software and Application Software.

System software: software that is for a computer system. A computer system

consists of many parts so a different type of software is needed for each part.

In other, we can say that system software is for hardware. A user can not

directly interact with system software but without it, he cannot access the

services of particular software. If said in very simple words, then it can be

said that the system software acts as an intermediary between the hardware of

the computer and the user who uses that computer.

For example, if we want to use a printer, then without printer software

(sometimes it is called printer driver software), we cannot use it. With the

help of printer software, the computer performs various functions of the

printer. Sometimes it is also called device drivers. ROM -BIOS driver, printer driver, USB

drivers, motherboard drivers, VGA drivers are some examples of system software.

Sometimes the operating system is also considered as system software.

Application software: Application software is the software that is made for a

particular work. It is written for a particular application. It is also called

end-user software. End-user means that the user interacts with. A computer user

directly interacts with it .every application software is written for a

particular work for example word processor software is made for only word

processing, paintbrush software is made only for painting type of work, the

internet browser is used for browsing the internet, etc.

Ms-office, ms-paint, Tux-paint, notepad, adobe reader, Mozilla Firefox,

Google Chrome, calculator are some very common examples of application

software.

(iii)Human being the most

important element of a computer system is its users.

The user's convenience is seen while designing the interface of any

application software. We cannot imagine the computer world without human

beings. The computer is made by humans and it is made for humans only. Humans

cannot be separated from a computer element. Data analysis, computer

programmer, server administrator, and

computer operators are some important examples of this.

(iv) Data:

data is also a very important element of a computer system. Basically, a

computer is a data processor so without data, a computer cannot perform

anything. Any raw fact is called data and after processing this data becomes

information. Suppose we have to find 799 whether it is an odd or even number,

then we will call 799 as data, and the rule with which we will find out whether

it is even or odd is called a program. And the result that will come out will

be output. So both the result and the program depend on our data, so data is

mandatory for computers. The same data can present many types of results; hence

the demand for data analysis has also increased nowadays. Text, audio, images,

and video are some most common forms of data. With the help of appropriate

software word processing, image processing, audio processing, and video

processing can be performed to get desired output.

(v) Network setup it is not

possible to imagine computers without the internet nowadays. Internet is a

network of networks. it means that the internet is dependent on various kinds

of networks. Most of the networks are belong to the Telecom network for example

BSNL, Airtel,jio, etc. So it is very important for the Internet to have this

kind of network.

Components

of a Computer System

There are basically three main components of a computer

system. Input unit, Process unit, and Output unit. Our computer system is based

on these three main components. If we talk about any element of the computer,

then it will be related to any one of these three components. To understand the

working of the computer, it is very important to understand how these three

components are related, and to understand this; we can take the help of a block

diagram.

Input unit: The main function of this unit is to take data,

commands or instructions. To receive data from the user or any other means

input devices are used. Mouse, keyboard, joystick, scanner, are some most

popular examples of input devices.

Process unit of C.P.U.(central

processing unit ): this is the core unit of a computer system. It is also

called the brain of the computer. Basically, the main task of the computer is

done by this unit. Because of this unit computer is called a data processor. In

a very simple word, we can say that it is not a part of a computer but it is a

computer. The job of this unit is very complicated so it consists of three

parts CPU, memory, and storage.

CPU (central processing unit): it

is a combination of ALU (arithmetic and Logical unit) and CU (control unit).ALU

performs arithmetic operation such as addition; subtraction etc. and CU

perform control operation of the computer system.

Memory: it is a helper of the CPU. As you know we

cannot do anything without memory in the same way CPU also needed memory to

store temporary data in the meanwhile of processing. It is used from taking

data from input devices to show the result. Everywhere temporary memory is

required. To do this RAM (Random Access Memory) is used along with the CPU.

There is also a special type of memory is used that is call ROM (Read Only

Memory).it holds data permanently. It is very costly so only the data that is

required for opening the computer is stored in it. So it can be said that there

are two types of computer memory RAM and ROM. always remember only RAM is

called computer main memory. It is also called primary storage devices.

Difference between RAM and ROM

| Difference | RAM | ROM |

|---|

| Data retention | RAM is a volatile memory that could store the data as long as the power is supplied. | ROM is a non-volatile memory that could retain the data even when power is turned off. |

| Working type | Data stored in RAM can be retrieved and altered. | Data stored in ROM can only be read. |

| Use | Used to store the data that has to be currently processed by CPU temporarily. | It stores the instructions required during bootstrap ( start )of the computer. |

| Speed | It is a high-speed memory. | It is much slower than the RAM. |

| CPU Interaction | The CPU can access the data stored on it. | The CPU can not access the data stored on it unless the data is stored in RAM. |

| Size and Capacity | Large size with higher capacity. | Small size with less capacity. |

| Used as/in | CPU Cache, Primary memory. | Firmware, Micro-controllers |

| Accessibility | The data stored is easily accessible | The data stored is not as easily accessible as in RAM |

| Cost | Costlier | cheaper than RAM. |

Storage: There is some difference between storage and memory.

In general computer, term memory refers to temporary whereas storage means

permanent storage. Mainly secondary storage devices are used for users, not for

computers. This means that secondary storage devices are not used in the data

processing. The main purposes of these devices are to keep user data or system

produced useful information for a long period of time. Examples of these

devices are hard disk, CD, DVD, pen drive, memory card, etc. it is cheaper than

primary storage ROM. generally, it is used to store a huge amount of data. A

normal HDD can store 1/2 terabytes of data.

Output units generally user interact with the input units and

output units. After performing very complex data processing job processor

produce a result but it is in electronic form human being cannot understand it.

So to convert results into human-readable form output devices are used. The

main function of the output unit is to convert digital data into a

human-understandable form. Monitor and printer are the two most common output

devices. Monitor display the result in a soft form on-screen and the printer

produce the result on paper in a hard form so sometimes soft copy and hard copy

terms are used for monitor and printer respectively. Speaker is also an output

device that is used to produce audio for listening.

Computer Generations

We

can divide the generation of computers into five stages. The sequence of

computer generation is as follows.

First Generation (1940-1956)

Vacuum tubes or thermionic

valve machines are used in first-generation computers.

Punched card and the paper

tape were used as an input device.

For output printouts were

used.

ENIAC (Electronic Numerical

Integrator and Computer) was the first electronic computer is introduced in

this generation.

Second Generation (1956-1963)

Transistor technologies were used in this

generation in place of the vacuum tubes.

Second-generation

computers have become much smaller than the first generation.

Computation

speed of second-generation computers was much faster than the first generation so it takes lesser time to produce

results.

Third Generation (1963-1971)

Third

generation of computers is based on Integrated Circuit (IC) technology.

Third

generation computers became much smaller in size than the first and second

generation, and their computation power increased dramatically.

The third

generation computer needs less power and also generated less heat.

The

maintenance cost of the computers in the third generation was also low.

Commercialization

of computers was also started in this generation.

Fourth Generation (1972-2010)

The

invention of microprocessor technology laid the foundation for the fourth

generation computer.

Fourth

generation computers not only became very small in size, but their ability to calculate

also increased greatly and at the same time they became portable, that is, it became very easy to move them from one place to another.

The

computers of fourth-generation started generating a very low amount of heat.

It is

much faster and accuracy became more reliable because of microprocessor technology.

Their

prices have also come down considerably.

Commercialization

of computers has become very fast and it is very easily available for common

people.

Fifth Generation (2010- till date)

AI

(Artificial intelligence) is the backbone technology of this generation of

computers. AI-enabled computers or programs behave like an intelligent person

that’s why this technology is called artificial intelligence technology.

In addition to intelligence, the speed of

computers has also increased significantly and the size has also reduced much

earlier than even the computer on the palm has been used.

Some of

the other popular advanced technologies of the fifth generation include Quantum

computation, Nanotechnology, Parallel processing, Big Data, and IoT, etc.

computer language

A computer cannot understand our language because it is a

machine. So, it understands machine language or we can say that the main

language of computers is machine language. Now the question arises that which

language a machine understands? The answer is very easy; it understands the

language of on and off.

But due to the complexity of the work of computers nowadays,

it is not easy to work with the computer only in the language of on and off, so

some other languages are used for the computer.

Therefore, the language of computers is mainly divided into

three parts.

1. Machine language: - Machine language is the language in which only 0

and 1 two digits are used. Any digital device only understands 0 and 1.It is

the primary language of a computer that the computer understands directly, the

number system which has only two digits is called a binary number system so we

can say that the computer can understand only binary codes. Binary codes have

only two digits 0 and 1 since the computer only understands the binary signal

i.e. 0 and 1 and the computer's circuit i.e. the circuit recognizes these

binary codes and converts it into electrical signals. In this, 0 means Low /off

and 1 means High/ On.

2. Assembly Language: - We use symbols in assembly language because machine

language is difficult for humans, so assembly language was used to make

communication with the computer easier. That is why it is also called symbol

language. Sometimes it is also called low-level language. But one thing must be

understood that the computer understands only and only the language of the

machine. So the computer needs a special type of program, called assembler, to

understand the assembly language. The assembler converts programs written in

assembly language into machine language so that the program written by us can

be understood by the computer. Assembly language is the second generation of

programming language.

3. High-Level

Language: - Symbols were used in assembly language, so it was difficult to

write a program with only symbols, so the need was felt for a language that

uses the alphabet of ordinary English or we can say it that we can understand

and write easily. Writing and understanding high-level language is much easier

than assembly language, so it is quite popular in the computer world with the

help of which it became very easy to write many programs. As the assembler was

used to convert assembly language to machine language, a special type of

software called compiler is used to convert high-level language to machine

language. Some of the major high-level programming languages are C, C ++, JAVA,

HTML, PASCAL, Ruby, etc.

Compiler, Interpreter, Assembler.

A compiler, interpreter, and assembler are three different types of software programs used in the process of programming and software development.

Compiler:

A compiler is a software program that converts the source code written in a high-level programming language into machine code, which can be executed directly by a computer's CPU. It is used to create standalone executable files that can be run on a specific platform. The compiler takes the entire source code as input, performs a series of checks and optimizations, and then generates the executable code.

Interpreter:

An interpreter is a software program that executes the source code line by line. Instead of generating machine code, it translates the source code into an intermediate code, which is then executed by the interpreter. This type of program is often used in scripting languages, where code is interpreted at runtime. An interpreter is slower than a compiler because it needs to read and interpret each line of code each time the program is run.

Assembler:

An assembler is a software program that converts assembly language into machine code. Assembly language is a low-level programming language that uses mnemonic codes to represent instructions that can be executed directly by a computer's CPU. Assemblers are used to create executable files and libraries that can be linked with other code. Unlike compilers and interpreters, assemblers work directly with machine code, making them very efficient but also very difficult to use.

In summary, compilers, interpreters, and assemblers are all used to translate human-readable code into machine-executable code, but they do it in different ways and for different purposes.

ALGORITHM

An algorithm is a finite sequence of well-defined

instructions, typically used to solve a class of specific problems or to

perform a computation.

Characteristics

of an Algorithm

Not all procedures can be called an algorithm.

An algorithm should have the following characteristics −

·

Unambiguous − Algorithm should be clear and

unambiguous. Each of its steps (or phases), and their inputs/outputs should be

clear and must lead to only one meaning.

·

Input − An algorithm should have 0 or more

well-defined inputs.

·

Output − An algorithm should have 1 or more

well-defined outputs, and should match the desired output.

·

Finiteness − Algorithms must terminate after a finite

number of steps.

·

Feasibility − Should be feasible with the available

resources.

·

Independent − An algorithm should have step-by-step

directions, which should be independent of any programming code.

Algorithm example :

Check whether a number is prime or not

Step

1: Start

Step

2: Declare variables n, i, flag.

Step

3: Initialize variables

flag ← 1

i ← 2

Step

4: Read n from the user.

Step

5: Repeat the steps until i=(n/2)

5.1 If remainder of n÷i equals 0

flag ← 0

Go to step 6

5.2 i ← i+1

Step

6: If flag = 0

Display n is not prime

else

Display n is prime

Step

7: Stop

Limitations of algorithm

Limited by the input: An algorithm is limited by the input data it receives. If the input is incorrect or incomplete, the algorithm may not be able to produce the desired output.

Limited by the complexity of the problem: Some problems are so complex that no algorithm can solve them efficiently. This is known as the computational complexity of the problem.

Limited by the computational resources: Algorithms require computational resources such as memory and processing power. If the resources are limited, the algorithm may not be able to solve the problem efficiently.

Limited by the accuracy of the data: Algorithms rely on accurate data to produce correct results. If the data is inaccurate or contains errors, the algorithm may produce incorrect results.

Limited by the assumptions made: Algorithms are often based on assumptions about the data or the problem being solved. If the assumptions are incorrect, the algorithm may produce incorrect results.

Limited by the time constraint: Some problems require a solution within a specific time frame. If the algorithm cannot produce a solution within the time limit, it may not be useful.

Limited by the programmer's ability: The effectiveness of an algorithm is limited by the skill and experience of the programmer who created it. A poorly designed algorithm may not produce the desired results, even if the problem is well-defined.

Flow chart

A flowchart is a

type of diagram that represents a workflow or process. A flowchart can also be defined as a

diagrammatic representation of an algorithm, a step-by-step approach to solving a task.

Example

Pseudo

code:

In computer science, pseudocode is a plain language

description of the steps in an algorithm or another system. Pseudocode often

uses structural conventions of a normal programming language, but is intended

for human reading rather than machine reading. It typically omits details that

are essential for machine understanding of the algorithm, such as variable

declarations and language-specific code.

Flag= 1, i=2

Read n

Repeat until i=(n/2)

If remainder of

n÷i equals 0

flag = 0

i =i+1

end if

end repeat

If flag = 0

Display n

is not prime

else

Display n

is prime

End if

////

Conditions in pseudo code

Pseudo code is a simple language used to express the logic of a computer program algorithm. It is not a real programming language, but it is used to describe the steps in an algorithm using English-like statements. Here are some common conditions used in pseudo code:

IF/THEN: This is used to check a condition and execute a set of instructions if the condition is true.

Example:

IF x > 0 THEN

PRINT "x is positive"

END IF

IF/THEN/ELSE: This is used to check a condition and execute one set of instructions if the condition is true, and another set of instructions if the condition is false.

Example:

IF x > 0 THEN

PRINT "x is positive"

ELSE

PRINT "x is negative or zero"

END IF

WHILE: This is used to execute a set of instructions repeatedly while a condition is true.

Example:

WHILE x > 0 DO

PRINT x

x = x - 1

END WHILE

FOR: This is used to execute a set of instructions a specified number of times.

Example:

FOR i = 1 TO 10 DO

PRINT i

END FOR

SWITCH/CASE: This is used to select one of several sets of instructions to execute based on the value of a variable.

Example:

SWITCH grade

CASE "A"

PRINT "Excellent"

CASE "B"

PRINT "Good"

CASE "C"

PRINT "Fair"

CASE "D"

PRINT "Poor"

CASE ELSE

PRINT "Invalid grade"

END SWITCH

Pseudo code loop

Loops in pseudo code

In pseudo code, loops are used to execute a block of code repeatedly until a certain condition is met. There are different types of loops, such as "for" loops, "while" loops, and "do-while" loops, which can be used depending on the specific use case. Here are some examples of how loops can be expressed in pseudo code:

For loop:

css

Copy code

for i = 1 to 10

// code to be executed

end for

This loop will execute the code inside the loop body 10 times, with the value of i starting at 1 and incrementing by 1 each time through the loop.

While loop:

vbnet

Copy code

while condition

// code to be executed

end while

This loop will execute the code inside the loop body repeatedly as long as the condition is true.

Do-while loop:

javascript

Copy code

do

// code to be executed

while condition

This loop will execute the code inside the loop body at least once, and then repeatedly as long as the condition is true.

Nested loops:

for i = 1 to 10

for j = 1 to 5

// code to be executed

end for

end for

This is an example of a nested loop, where one loop is inside another. In this case, the code inside the inner loop will be executed 5 times for each iteration of the outer loop, resulting in a total of 50 executions of the inner loop code.

UNIT II

----------

What is an Operating System?

An Operating

System (OS) is a software that acts as an interface between computer

hardware components and the user. Every computer system must have at least one

operating system to run other programs. Applications like Browsers, MS Office,

Notepad Games, etc., need some environment to run and perform its tasks.

The

OS helps you to communicate with the computer without knowing how to speak the

computer's language. It is not possible for the user to use any computer or

mobile device without having an operating system.

History Of OS

- Operating systems were first

developed in the late 1950s to manage tape storage

- The General Motors Research Lab

implemented the first OS in the early 1950s for their IBM 701

- In the mid-1960s, operating

systems started to use disks

- In the late 1960s, the first

version of the Unix OS was developed

- The first OS built by Microsoft

was DOS. It was built in 1981 by purchasing the 86-DOS software from a

Seattle company

- The present-day popular OS

Windows first came to existence in 1985 when a GUI was created and paired

with MS-DOS.

Types of Operating System (OS)

Following

are the popular types of Operating System:

- Batch Operating System

- Multitasking/Time Sharing OS

- Multiprocessing OS

- Real Time OS

- Distributed OS

- Network OS

- Mobile OS

Batch Operating System

Some computer processes

are very lengthy and time-consuming. To speed the same process, a job with a

similar type of needs are batched together and run as a group.

The user of a batch

operating system never directly interacts with the computer. In this type of

OS, every user prepares his or her job on an offline device like a punch card

and submit it to the computer operator.

Multi-Tasking/Time-sharing

Operating systems

Time-sharing operating

system enables people located at a different terminal(shell) to use a single

computer system at the same time. The processor time (CPU) which is shared

among multiple users is termed as time sharing.

Real time OS

A real time operating

system time interval to process and respond to inputs is very small. Examples:

Military Software Systems, Space Software Systems are the Real time OS example.

Distributed Operating

System

Distributed systems use

many processors located in different machines to provide very fast computation

to its users.

Network Operating System

Network Operating

System runs on a server. It provides the capability to serve to manage data,

user, groups, security, application, and other networking functions.

Mobile OS

Mobile operating

systems are those OS which is especially that are designed to power

smartphones, tablets, and wearables devices.

Some most famous mobile

operating systems are Android and iOS, but others include BlackBerry, Web, and

watchOS.

Functions of Operating System

Below

are the main functions of Operating System:

- Process management:- Process management helps OS to create and delete

processes. It also provides mechanisms for synchronization and

communication among processes.

- Memory management:- Memory management module performs the task of

allocation and de-allocation of memory space to programs in need of this

resources.

- File management:- It manages all the file-related activities such as

organization storage, retrieval, naming, sharing, and protection of files.

- Device Management: Device management keeps tracks of all devices. This

module also responsible for this task is known as the I/O controller. It

also performs the task of allocation and de-allocation of the devices.

- I/O System Management: One of the main objects of any OS is to hide the

peculiarities of that hardware devices from the user.

- Secondary-Storage Management: Systems have several levels of storage which includes

primary storage, secondary storage, and cache storage. Instructions and

data must be stored in primary storage or cache so that a running program

can reference it.

- Security:- Security module protects the data and information of

a computer system against malware threat and authorized access.

- Command interpretation: This module is interpreting commands given by the and

acting system resources to process that commands.

- Networking: A distributed system is a group of processors

which do not share memory, hardware devices, or a clock. The processors

communicate with one another through the network.

- Job accounting: Keeping track of time & resource used by various

job and users.

- Communication management: Coordination and assignment of compilers,

interpreters, and another software resource of the various users of the

computer systems.

Features of Operating System (OS)

Here

is a list important features of OS:

- Protected and supervisor mode

- Allows disk access and file

systems Device drivers Networking Security

- Program Execution

- Memory management Virtual

Memory Multitasking

- Handling I/O operations

- Manipulation of the file system

- Error Detection and handling

- Resource allocation

- Information and Resource

Protection

////

Elements of command based and GUI based operating system

Command-based operating systems (CLI):

Command Line Interface (CLI): Command-based operating systems use a command line interface, which is a text-based interface that allows users to enter commands to perform tasks.

Shell: The shell is a program that provides the interface between the user and the operating system. It interprets the commands entered by the user and executes them.

Command interpreter: The command interpreter is a program that interprets the commands entered by the user and converts them into machine language that the computer can understand.

Command prompt: The command prompt is a text-based prompt that indicates that the operating system is ready to accept commands from the user.

GUI-based operating systems:

Graphical User Interface (GUI): GUI-based operating systems use a graphical user interface, which is a visual interface that allows users to interact with the operating system using graphical elements such as icons, windows, and menus.

Desktop: The desktop is the graphical interface that is displayed when the user logs into the operating system. It provides a visual representation of the computer's file system and allows users to launch applications and access files.

Window Manager: The window manager is a program that manages the display of windows on the desktop. It allows users to move and resize windows and switch between different applications.

Icons: Icons are graphical representations of applications or files that allow users to launch applications and access files by clicking on them.

Menus: Menus are graphical elements that allow users to access various functions of the operating system and applications by selecting them from a list of options.

Computer Network

A computer network is a group of computers linked to each other that

enables the computer to communicate with another computer and share their

resources, data, and applications.

A computer network can be categorized by their size. A computer

network is mainly of four types:

- LAN(Local

Area Network)

- MAN(Metropolitan

Area Network)

- WAN(Wide

Area Network)

LAN(Local Area Network)

- Local

Area Network is a group of computers connected to each other in a small

area such as building, office.

- LAN is

used for connecting two or more personal computers through a communication

medium such as twisted pair, coaxial cable, etc.

- It is

less costly as it is built with inexpensive hardware such as hubs, network

adapters, and ethernet cables.

- The data

is transferred at an extremely faster rate in Local Area Network.

- Local

Area Network provides higher security.

MAN(Metropolitan Area

Network)

- A

metropolitan area network is a network that covers a larger geographic

area by interconnecting a different LAN to form a larger network.

- Government

agencies use MAN to connect to the citizens and private industries.

- In MAN,

various LANs are connected to each other through a telephone exchange

line.

- The most

widely used protocols in MAN are RS-232, Frame Relay, ATM, ISDN, OC-3,

ADSL, etc.

- It has a

higher range than Local Area Network(LAN).

Uses Of Metropolitan

Area Network:

- MAN is

used in communication between the banks in a city.

- It can be

used in an Airline Reservation.

- It can be

used in a college within a city.

- It can

also be used for communication in the military.

WAN(Wide Area Network)

- A Wide

Area Network is a network that extends over a large geographical area such

as states or countries.

- A Wide

Area Network is quite bigger network than the LAN.

- A Wide

Area Network is not limited to a single location, but it spans over a

large geographical area through a telephone line, fibre optic cable or

satellite links.

- The

internet is one of the biggest WAN in the world.

- A Wide

Area Network is widely used in the field of Business, government, and

education.

Examples Of Wide Area

Network:

- Mobile Broadband: A 4G network is widely used across a

region or country.

- Last mile: A telecom company is used to provide the

internet services to the customers in hundreds of cities by connecting

their home with fiber.

- Private network: A bank provides a private network that

connects the 44 offices. This network is made by using the telephone

leased line provided by the telecom company.

Advantages Of Wide Area

Network:

Following are the advantages of the Wide Area Network:

- Geographical area: A Wide Area Network provides a large

geographical area. Suppose if the branch of our office is in a different

city then we can connect with them through WAN. The internet provides a

leased line through which we can connect with another branch.

- Centralized data: In case of WAN network, data is

centralized. Therefore, we do not need to buy the emails, files or back up

servers.

- Get updated files: Software companies work on the live

server. Therefore, the programmers get the updated files within seconds.

- Exchange messages: In a WAN network, messages are

transmitted fast. The web application like Facebook, Whatsapp, Skype

allows you to communicate with friends.

- Sharing of software and resources: In WAN network, we can share the software

and other resources like a hard drive, RAM.

- Global business: We can do the business over the internet

globally.

- High bandwidth: If we use the leased lines for our

company then this gives the high bandwidth. The high bandwidth increases

the data transfer rate which in turn increases the productivity of our

company.

Disadvantages of Wide

Area Network:

The following are the disadvantages of the Wide Area Network:

- Security issue: A WAN network has more security issues as

compared to LAN and MAN network as all the technologies are combined

together that creates the security problem.

- Needs Firewall & antivirus

software: The

data is transferred on the internet which can be changed or hacked by the

hackers, so the firewall needs to be used. Some people can inject the

virus in our system so antivirus is needed to protect from such a virus.

- High Setup cost: An installation cost of the WAN network

is high as it involves the purchasing of routers, switches.

- Troubleshooting problems: It covers a large area so fixing the

problem is difficult.

Internetwork

- An

internetwork is defined as two or more computer network LANs or WAN or

computer network segments are connected using devices, and they are

configured by a local addressing scheme. This process is known as internetworking.

- An

interconnection between public, private, commercial, industrial, or

government computer networks can also be defined as internetworking.

- An

internetworking uses the internet protocol.

- The

reference model used for internetworking is Open System

Interconnection(OSI).

Types of Internetwork:

1. Extranet: An extranet is a communication network

based on the internet protocol such as Transmission Control protocol and internet

protocol. It is used for information sharing. The access to the extranet is

restricted to only those users who have login credentials. An extranet is the

lowest level of internetworking. It can be categorized as MAN, WAN or

other computer networks. An extranet cannot have a single LAN,

atleast it must have one connection to the external network.

2. Intranet: An intranet is a private network based on

the internet protocol such as Transmission Control protocol and internet

protocol. An intranet belongs to an organization which is only accessible

by the organization's employee or members. The main aim of the

intranet is to share the information and resources among the organization

employees. An intranet provides the facility to work in groups and for

teleconferences.

Intranet advantages:

- Communication: It provides a cheap and easy

communication. An employee of the organization can communicate with

another employee through email, chat.

- Time-saving: Information on the intranet is shared in

real time, so it is time-saving.

- Collaboration: Collaboration is one of the most

important advantage of the intranet. The information is distributed among

the employees of the organization and can only be accessed by the

authorized user.

- Platform independency: It is a neutral architecture as the

computer can be connected to another device with different architecture.

- Cost effective: People can see the data and documents by

using the browser and distributes the duplicate copies over the intranet.

This leads to a reduction in the cost.

Primary

Network Topologies

The way in which devices are interconnected to form a network is

called network topology. Some of the factors that affect choice of topology for

a network are −

- Cost−

Installation cost is a very important factor in overall cost of setting up

an infrastructure. So cable lengths, distance between nodes, location of

servers, etc. have to be considered when designing a network.

- Flexibility−

Topology of a network should be flexible enough to allow reconfiguration

of office set up, addition of new nodes and relocation of existing nodes.

- Reliability−

Network should be designed in such a way that it has minimum down time.

Failure of one node or a segment of cabling should not render the whole

network useless.

- Scalability−

Network topology should be scalable, i.e. it can accommodate load of new

devices and nodes without perceptible drop in performance.

- Ease of

installation− Network should be easy to install in terms of hardware,

software and technical personnel requirements.

- Ease of

maintenance− Troubleshooting and maintenance of network should be easy.

Topology defines the structure of

the network of how all the components are interconnected to each other. There

are two types of topology: physical and logical topology.

Physical topology is the geometric representation of all the nodes in a

network.

Bus Topology

- The bus

topology is designed in such a way that all the stations are connected

through a single cable known as a backbone cable.

- Each node

is either connected to the backbone cable by drop cable or directly

connected to the backbone cable.

- When a

node wants to send a message over the network, it puts a message over the

network. All the stations available in the network will receive the message

whether it has been addressed or not.

- The bus

topology is mainly used in 802.3 (ethernet) and 802.4 standard networks.

- The

configuration of a bus topology is quite simpler as compared to other

topologies.

- The

backbone cable is considered as a "single lane" through

which the message is broadcast to all the stations.

- The most

common access method of the bus topologies is CSMA (Carrier

Sense Multiple Access).

CSMA: It is a media access control used to control

the data flow so that data integrity is maintained, i.e., the packets do not

get lost. There are two alternative ways of handling the problems that occur

when two nodes send the messages simultaneously.

- CSMA CD: CSMA CD (Collision detection) is

an access method used to detect the collision. Once the collision is

detected, the sender will stop transmitting the data. Therefore, it works

on "recovery after the collision".

- CSMA CA: CSMA CA (Collision Avoidance) is

an access method used to avoid the collision by checking whether the

transmission media is busy or not. If busy, then the sender waits until

the media becomes idle. This technique effectively reduces the possibility

of the collision. It does not work on "recovery after the

collision".

Advantages of Bus

topology:

- Low-cost cable: In bus topology, nodes are directly

connected to the cable without passing through a hub. Therefore, the

initial cost of installation is low.

- Moderate data speeds: Coaxial or twisted pair cables are mainly

used in bus-based networks that support upto 10 Mbps.

- Familiar technology: Bus topology is a familiar technology as

the installation and troubleshooting techniques are well known, and

hardware components are easily available.

- Limited failure: A failure in one node will not have any

effect on other nodes.

Disadvantages of Bus

topology:

- Extensive cabling: A bus topology is quite simpler, but

still it requires a lot of cabling.

- Difficult troubleshooting: It requires specialized test equipment to

determine the cable faults. If any fault occurs in the cable, then it

would disrupt the communication for all the nodes.

- Signal interference: If two nodes send the messages

simultaneously, then the signals of both the nodes collide with each

other.

- Reconfiguration difficult: Adding new devices to the network would

slow down the network.

- Attenuation: Attenuation is a loss of signal leads to

communication issues. Repeaters are used to regenerate the signal.

Ring Topology

- Ring

topology is like a bus topology, but with connected ends.

- The node

that receives the message from the previous computer will re-transmit to

the next node.

- The data

flows in one direction, i.e., it is unidirectional.

- The data

flows in a single loop continuously known as an endless loop.

- It has no

terminated ends, i.e., each node is connected to another node and has no

termination point.

- The data

in a ring topology flow in a clockwise direction.

- The most

common access method of the ring topology is token passing.

- Token passing: It is a network access method in which

token is passed from one node to another node.

- Token: It is a frame that circulates around the

network.

Working of Token

passing

- A token

moves around the network, and it is passed from computer to computer until

it reaches the destination.

- The

sender modifies the token by putting the address along with the data.

- The data

is passed from one device to another device until the destination address

matches. Once the token received by the destination device, then it sends

the acknowledgment to the sender.

- In a ring

topology, a token is used as a carrier.

Advantages of Ring

topology:

- Network Management: Faulty devices can be removed from the

network without bringing the network down.

- Product availability: Many hardware and software tools for

network operation and monitoring are available.

- Cost: Twisted pair cabling is inexpensive and

easily available. Therefore, the installation cost is very low.

- Reliable: It is a more reliable network because the

communication system is not dependent on the single host computer.

Disadvantages of Ring

topology:

- Difficult troubleshooting: It requires specialized test equipment to

determine the cable faults. If any fault occurs in the cable, then it

would disrupt the communication for all the nodes.

- Failure: The breakdown in one station leads to the

failure of the overall network.

- Reconfiguration difficult: Adding new devices to the network would

slow down the network.

- Delay: Communication delay is directly

proportional to the number of nodes. Adding new devices increases the

communication delay.

Star Topology

- Star

topology is an arrangement of the network in which every node is connected

to the central hub, switch or a central computer.

- The

central computer is known as a server, and the peripheral

devices attached to the server are known as clients.

- Coaxial

cable or RJ-45 cables are used to connect the computers.

- Hubs or

Switches are mainly used as connection devices in a physical star

topology.

- Star

topology is the most popular topology in network implementation.

Advantages of Star

topology

- Efficient troubleshooting: Troubleshooting is quite efficient in a

star topology as compared to bus topology. In a bus topology, the manager

has to inspect the kilometers of cable. In a star topology, all the

stations are connected to the centralized network. Therefore, the network

administrator has to go to the single station to troubleshoot the problem.

- Network control: Complex network control features can be

easily implemented in the star topology. Any changes made in the star

topology are automatically accommodated.

- Limited failure: As each station is connected to the

central hub with its own cable, therefore failure in one cable will not

affect the entire network.

- Familiar technology: Star topology is a familiar technology as

its tools are cost-effective.

- Easily expandable: It is easily expandable as new stations

can be added to the open ports on the hub.

- Cost effective: Star topology networks are cost-effective

as it uses inexpensive coaxial cable.

- High data speeds: It supports a bandwidth of approx

100Mbps. Ethernet 100BaseT is one of the most popular Star topology

networks.

Disadvantages of Star

topology

- A Central point of failure: If the central hub or switch goes down,

then all the connected nodes will not be able to communicate with each

other.

- Cable: Sometimes cable routing becomes difficult

when a significant amount of routing is required.

Tree topology

- Tree

topology combines the characteristics of bus topology and star topology.

- A tree

topology is a type of structure in which all the computers are connected

with each other in hierarchical fashion.

- The

top-most node in tree topology is known as a root node, and all other

nodes are the descendants of the root node.

- There is

only one path exists between two nodes for the data transmission. Thus, it

forms a parent-child hierarchy.

Advantages of Tree

topology

- Support for broadband transmission: Tree topology is mainly used to provide

broadband transmission, i.e., signals are sent over long distances without

being attenuated.

- Easily expandable: We can add the new device to the existing

network. Therefore, we can say that tree topology is easily expandable.

- Easily manageable: In tree topology, the whole network is

divided into segments known as star networks which can be easily managed

and maintained.

- Error detection: Error detection and error correction are

very easy in a tree topology.

- Limited failure: The breakdown in one station does not

affect the entire network.

- Point-to-point wiring: It has point-to-point wiring for

individual segments.

Disadvantages of Tree

topology

- Difficult troubleshooting: If any fault occurs in the node, then it

becomes difficult to troubleshoot the problem.

- High cost: Devices required for broadband

transmission are very costly.

- Failure: A tree topology mainly relies on main bus

cable and failure in main bus cable will damage the overall network.

- Reconfiguration difficult: If new devices are added, then it becomes

difficult to reconfigure.

Mesh topology

- Mesh

technology is an arrangement of the network in which computers are

interconnected with each other through various redundant connections.

- There are

multiple paths from one computer to another computer.

- It does

not contain the switch, hub or any central computer which acts as a

central point of communication.

- The

Internet is an example of the mesh topology.

- Mesh

topology is mainly used for WAN implementations where communication

failures are a critical concern.

- Mesh

topology is mainly used for wireless networks.

Mesh topology is

divided into two categories:

- Fully

connected mesh topology

- Partially

connected mesh topology

- Full Mesh Topology: In a full mesh topology, each computer is

connected to all the computers available in the network.

- Partial Mesh Topology: In a partial mesh topology, not all but

certain computers are connected to those computers with which they

communicate frequently.

Advantages of Mesh topology:

Reliable: The mesh topology networks are very reliable

as if any link breakdown will not affect the communication between connected

computers.

Fast Communication: Communication is very fast between the nodes.

Easier Reconfiguration: Adding new devices would not disrupt the

communication between other devices.

Disadvantages of Mesh

topology

- Cost: A mesh topology contains a large number

of connected devices such as a router and more transmission media than

other topologies.

- Management: Mesh topology networks are very large and

very difficult to maintain and manage. If the network is not monitored

carefully, then the communication link failure goes undetected.

- Efficiency: In this topology, redundant connections

are high that reduces the efficiency of the network.

What are network devices?

Network devices, or networking hardware, are physical devices that are required for communication and interaction between hardware on a computer network.

Types of network devices

Here is the common network device list:

Hub

Hubs connect multiple

computer networking devices together. A hub also acts as a repeater in that it

amplifies signals that deteriorate after traveling long distances over

connecting cables. A hub is the simplest in the family of network connecting

devices because it connects LAN components with identical protocols.

A hub can be used with

both digital and analog data, provided its settings have been configured to

prepare for the formatting of the incoming data. For example, if the incoming

data is in digital format, the hub must pass it on as packets; however, if the

incoming data is analog, then the hub passes it on in signal form.

Hubs do not perform

packet filtering or addressing functions; they just send data packets to all

connected devices. Hubs operate at the Physical layer of the Open Systems Interconnection (OSI) model.

There are two types of hubs: simple and multiple port.

Switch

Switches generally

have a more intelligent role than hubs. A switch is a multiport device that

improves network efficiency. The switch maintains limited routing information

about nodes in the internal network, and it allows connections to systems like

hubs or routers. Strands of LANs are usually connected using switches.

Generally, switches can read the hardware addresses of incoming packets to

transmit them to the appropriate destination.

Using switches

improves network efficiency over hubs or routers because of the virtual circuit

capability. Switches also improve network security because the virtual circuits

are more difficult to examine with network monitors. You can think of a switch

as a device that has some of the best capabilities of routers and hubs

combined. A switch can work at either the Data Link layer or the Network layer

of the OSI model. A multilayer switch is one that can operate at both layers,

which means that it can operate as both a switch and a router. A multilayer

switch is a high-performance device that supports the same routing protocols as

routers.

Switches can be

subject to distributed denial of service (DDoS) attacks; flood guards are used

to prevent malicious traffic from bringing the switch to a halt. Switch port

security is important so be sure to secure switches: Disable all unused ports

and use DHCP snooping, ARP inspection and MAC address filtering.

Router

Routers help transmit

packets to their destinations by charting a path through the sea of

interconnected networking devices using different network topologies. Routers

are intelligent devices, and they store information about the networks they’re

connected to. Most routers can be configured to operate as packet-filtering

firewalls and use access control lists (ACLs). Routers, in conjunction with a

channel service unit/data service unit (CSU/DSU), are also used to translate

from LAN framing to WAN framing. This is needed because LANs and WANs use

different network protocols. Such routers are known as border routers. They

serve as the outside connection of a LAN to a WAN, and they operate at the border

of your network.

Router are also used

to divide internal networks into two or more subnetworks. Routers can also be

connected internally to other routers, creating zones that operate

independently. Routers establish communication by maintaining tables about

destinations and local connections. A router contains information about the

systems connected to it and where to send requests if the destination isn’t

known. Routers usually communicate routing and other information using one of

three standard protocols: Routing Information Protocol (RIP), Border Gateway

Protocol (BGP) or Open Shortest Path First (OSPF).

Routers are your first

line of defense, and they must be configured to pass only traffic that is

authorized by network administrators. The routes themselves can be configured

as static or dynamic. If they are static, they can only be configured manually

and stay that way until changed. If they are dynamic, they learn of other

routers around them and use information about those routers to build their routing

tables.

Routers are

general-purpose devices that interconnect two or more heterogeneous networks.

They are usually dedicated to special-purpose computers, with separate input

and output network interfaces for each connected network. Because routers and

gateways are the backbone of large computer networks like the internet, they

have special features that give them the flexibility and the ability to cope

with varying network addressing schemes and frame sizes through segmentation of

big packets into smaller sizes that fit the new network components. Each router

interface has its own Address Resolution Protocol (ARP) module, its own LAN

address (network card address) and its own Internet Protocol (IP) address. The

router, with the help of a routing table, has knowledge of routes a packet

could take from its source to its destination. The routing table, like in the

bridge and switch, grows dynamically. Upon receipt of a packet, the router

removes the packet headers and trailers and analyzes the IP header by determining

the source and destination addresses and data type, and noting the arrival

time. It also updates the router table with new addresses not already in the

table. The IP header and arrival time information is entered in the routing

table. Routers normally work at the Network layer of the OSI model.

Bridge

Bridges are used to

connect two or more hosts or network segments together. The basic role of

bridges in network architecture is storing and forwarding frames between the

different segments that the bridge connects. They use hardware Media Access

Control (MAC) addresses for transferring frames. By looking at the MAC address

of the devices connected to each segment, bridges can forward the data or block

it from crossing. Bridges can also be used to connect two physical LANs into a

larger logical LAN.

Bridges work only at

the Physical and Data Link layers of the OSI model. Bridges are used to divide

larger networks into smaller sections by sitting between two physical network

segments and managing the flow of data between the two.

Bridges are like hubs

in many respects, including the fact that they connect LAN components with

identical protocols. However, bridges filter incoming data packets, known as

frames, for addresses before they are forwarded. As it filters the data

packets, the bridge makes no modifications to the format or content of the

incoming data. The bridge filters and forwards frames on the network with the

help of a dynamic bridge table. The bridge table, which is initially empty,

maintains the LAN addresses for each computer in the LAN and the addresses of

each bridge interface that connects the LAN to other LANs. Bridges, like hubs,

can be either simple or multiple port.

Bridges have mostly

fallen out of favor in recent years and have been replaced by switches, which

offer more functionality. In fact, switches are sometimes referred to as

“multiport bridges” because of how they operate.

Gateway

Gateways normally work

at the Transport and Session layers of the OSI model. At the Transport layer

and above, there are numerous protocols and standards from different vendors;

gateways are used to deal with them. Gateways provide translation between

networking technologies such as Open System Interconnection (OSI) and

Transmission Control Protocol/Internet Protocol (TCP/IP). Because of this,

gateways connect two or more autonomous networks, each with its own routing

algorithms, protocols, topology, domain name service, and network

administration procedures and policies.

Gateways perform all

of the functions of routers and more. In fact, a router with added translation

functionality is a gateway. The function that does the translation between

different network technologies is called a protocol converter.

Modem

Modems

(modulators-demodulators) are used to transmit digital signals over analog

telephone lines. Thus, digital signals are converted by the modem into analog

signals of different frequencies and transmitted to a modem at the receiving

location. The receiving modem performs the reverse transformation and provides

a digital output to a device connected to a modem, usually a computer. The

digital data is usually transferred to or from the modem over a serial line

through an industry standard interface, RS-232. Many telephone companies offer

DSL services, and many cable operators use modems as end terminals for

identification and recognition of home and personal users. Modems work on both

the Physical and Data Link layers.

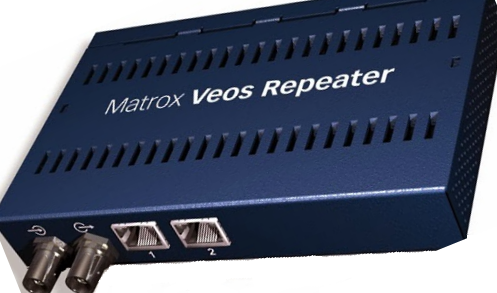

Repeater

A repeater is an

electronic device that amplifies the signal it receives. You can think of repeater

as a device which receives a signal and retransmits it at a higher level or

higher power so that the signal can cover longer distances, more than 100

meters for standard LAN cables. Repeaters work on the Physical layer.

Access Point

While an access point

(AP) can technically involve either a wired or wireless connection, it commonly

means a wireless device. An AP works at the second OSI layer, the Data Link

layer, and it can operate either as a bridge connecting a standard wired

network to wireless devices or as a router passing data transmissions from one

access point to another.

Wireless access points

(WAPs) consist of a transmitter and receiver (transceiver) device used to

create a wireless LAN (WLAN). Access points typically are separate network devices

with a built-in antenna, transmitter and adapter. APs use the wireless

infrastructure network mode to provide a connection point between WLANs and a

wired Ethernet LAN. They also have several ports, giving you a way to expand

the network to support additional clients. Depending on the size of the

network, one or more APs might be required to provide full coverage. Additional

APs are used to allow access to more wireless clients and to expand the range

of the wireless network. Each AP is limited by its transmission range — the

distance a client can be from an AP and still obtain a usable signal and data

process speed. The actual distance depends on the wireless standard, the

obstructions and environmental conditions between the client and the AP. Higher

end APs have high-powered antennas, enabling them to extend how far the

wireless signal can travel.

APs might also provide

many ports that can be used to increase the network’s size, firewall

capabilities and Dynamic Host Configuration Protocol (DHCP) service. Therefore,

we get APs that are a switch, DHCP server, router and firewall.

To connect to a

wireless AP, you need a service set identifier (SSID) name. 802.11 wireless

networks use the SSID to identify all systems belonging to the same network,

and client stations must be configured with the SSID to be authenticated to the

AP. The AP might broadcast the SSID, allowing all wireless clients in the area

to see the AP’s SSID. However, for security reasons, APs can be configured not

to broadcast the SSID, which means that an administrator needs to give client

systems the SSID instead of allowing it to be discovered automatically.

Wireless devices ship with default SSIDs, security settings, channels,

passwords and usernames. For security reasons, it is strongly recommended that

you change these default settings as soon as possible because many internet

sites list the default settings used by manufacturers.

Access points can be

fat or thin. Fat APs, sometimes still referred to as autonomous APs, need to be

manually configured with network and security settings; then they are

essentially left alone to serve clients until they can no longer function. Thin

APs allow remote configuration using a controller. Since thin clients do not

need to be manually configured, they can be easily reconfigured and monitored.

Access points can also be controller-based or stand-alone.

UNIT III

----------

The Internet is the global system of interconnected

computer networks that uses the Internet protocol suite (TCP/IP) to communicate

between networks and devices. It is a network of networks that consists of

private, public, academic, business, and government networks of local to global

scope, linked by a broad array of electronic, wireless, and optical networking

technologies. The Internet carries a vast range of information resources and

services, such as the inter-linked hypertext documents and applications of the

World Wide Web (WWW), electronic mail, telephony, and file sharing.

Internet architecture

Functioning of internet

The internet is a global network of connected computers and servers that allows users to access and share information and resources from anywhere in the world. The basic functioning of the internet involves several interconnected layers of hardware and software that work together to transmit data between devices.

Here is a simplified overview of how the internet works:

Devices: The internet is accessed through various devices such as computers, smartphones, tablets, and servers that are connected to the network.

Protocols: The internet uses a set of standardized protocols, including TCP/IP (Transmission Control Protocol/Internet Protocol), to transmit and receive data packets between devices.

ISP: Internet Service Providers (ISPs) provide users with access to the internet by connecting their devices to the network via wired or wireless connections.

DNS: Domain Name System (DNS) servers translate human-readable domain names (such as www.google.com) into IP addresses (such as 172.217.5.78) that computers can understand.

Routing: When a user sends data over the internet, it is broken up into small packets and sent through a series of routers that determine the best path for the data to take to reach its destination.

Websites and servers: Websites and other online services are hosted on servers that are connected to the internet and provide users with access to content and resources.

Encryption: To ensure the security and privacy of data transmitted over the internet, encryption protocols such as SSL (Secure Sockets Layer) and TLS (Transport Layer Security) are used to encrypt data before it is transmitted and decrypt it when it is received.

Overall, the internet is a complex and constantly evolving network that requires the cooperation of many different devices and technologies to function effectively.

WWW

The World Wide Web

(WWW) is a network of online content that is formatted in HTML and accessed via

HTTP. The term refers to all the interlinked HTML pages that can be accessed

over the Internet. The World Wide Web was originally designed in 1991 by Tim

Berners-Lee while he was a contractor at CERN.

The World Wide Web is

most often referred to simply as “the Web.”

The World Wide Web is

what most people think of as the Internet. It is all the Web pages, pictures,

videos and other online content that can be accessed via a Web browser. The

Internet, in contrast, is the underlying network connection that allows us to

send email and access the World Wide Web. The early Web was a collection of

text-based sites hosted by organizations that were technically gifted enough to

set up a Web server and learn HTML. It has continued to evolve since the

original design, and it now includes interactive (social) media and user-generated

content that requires little to no technical skills.

We owe the free Web to

Berners-Lee and CERN’s decision to give away one of the greatest inventions of

the century.

FTP

File Transfer Protocol

(FTP) is a standard Internet protocol for transmitting files between computers

on the Internet over TCP/IP connections. FTP is a client-server protocol where

a client will ask for a file, and a local or remote server will provide it.

The end-users machine

is typically called the local host machine, which is connected via the internet

to the remote host—which is the second machine running the FTP software.

Anonymous FTP is a type

of FTP that allows users to access files and other data without needing an ID

or password. Some websites will allow visitors to use a guest ID or password-

anonymous FTP allows this.

Although a lot of file

transfer is now handled using HTTP, FTP is still commonly used to transfer

files “behind the scenes” for other applications — e.g., hidden behind the user

interfaces of banking, a service that helps build a website, such as Wix or

SquareSpace, or other services. It is also used, via Web browsers, to download

new applications.

How

FTP works

FTP is a client-server

protocol that relies on two communications channels between client and server:

a command channel for controlling the conversation and a data channel for

transmitting file content. Clients initiate conversations with servers by

requesting to download a file. Using FTP, a client can upload, download,