AKTU MCA I FCET NOTES UNIT V

Unit V

Emerging Technologies: Introduction, overview, features, limitations and application areas of Augmented Reality, Virtual Reality, Grid computing, Green computing, Big data analytics, Quantum Computing and Brain Computer Interface

----------

What is Big Data?

Big Data is a term for collections of data sets so large and complex that they are difficult to store and process using available database management tools or traditional data processing applications. The challenges include capturing, curating, storing, searching, sharing, transferring, analyzing and visualizing this data.

Big Data Characteristics

The five characteristics that define Big Data are: Volume, Velocity, Variety, Veracity and Value.

1. VOLUME

Volume refers to the ‘amount of data’, which is growing day by day at a very fast pace. The data generated by humans, machines and their interactions on social media alone is massive. Researchers predicted that 40 Zettabytes (40,000 Exabytes) of data would be generated by 2020, a 300-fold increase over 2005.
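The arithmetic behind these figures can be checked with a short sketch. It assumes decimal (SI) prefixes, so 1 Zettabyte = 1,000 Exabytes; the function names are illustrative only, and the 2005 baseline is simply what the quoted 300-fold growth implies, not an independently sourced number.

```python
# Unit conversion and growth arithmetic for the figures quoted in the text.
EXABYTES_PER_ZETTABYTE = 1000  # assumption: decimal (SI) prefixes


def zettabytes_to_exabytes(zb):
    """Convert Zettabytes to Exabytes."""
    return zb * EXABYTES_PER_ZETTABYTE


def implied_baseline(final_amount, growth_factor):
    """Amount at the start of a period, given the final amount and the growth multiple."""
    return final_amount / growth_factor


predicted_2020_zb = 40    # from the text
growth_since_2005 = 300   # from the text

predicted_2020_eb = zettabytes_to_exabytes(predicted_2020_zb)
baseline_2005_eb = implied_baseline(predicted_2020_eb, growth_since_2005)

print(predicted_2020_eb)            # 40000
print(round(baseline_2005_eb, 1))   # 133.3
```

In other words, the prediction implies that roughly 133 Exabytes existed in 2005.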

2. VELOCITY

Velocity is the pace at which different sources generate data every day. This flow of data is massive and continuous. At the time of writing, Facebook reported 1.03 billion Daily Active Users (DAU) on mobile, an increase of 22% year-over-year. This shows how fast the number of users is growing on social media and how fast data is being generated daily. If you can handle the velocity, you can generate insights and make decisions based on real-time data.

3. VARIETY

Because many sources contribute to Big Data, the types of data they generate differ. Data can be structured, semi-structured or unstructured, so a wide variety of data is generated every day. Earlier, data came mainly from Excel spreadsheets and databases; now it also arrives as images, audio, video, sensor data and so on. This variety of unstructured data creates problems in capturing, storing, mining and analyzing the data.

4. VERACITY

Veracity refers to data in doubt, that is, uncertainty about the available data due to inconsistency and incompleteness. For example, a table may have a few values missing, and a few values may be hard to accept, such as a minimum value of 15000 in a row where such a value is not possible. This inconsistency and incompleteness is what Veracity describes.

Available data can sometimes be messy and may be difficult to trust. With many forms of big data, quality and accuracy are difficult to control, as with Twitter posts containing hashtags, abbreviations, typos and colloquial speech. Volume is often the reason behind the lack of quality and accuracy in the data.

· Due to uncertainty of data, 1 in 3 business leaders don’t trust the information they use to make decisions.

· It was found in a survey that 27% of respondents were unsure of how much of their data was inaccurate.

· Poor data quality costs the US economy around $3.1 trillion a year.
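A basic veracity check looks for exactly the two problems described above: missing values and values that cannot be right. The following is a minimal sketch; the records, the field names and the plausible range are all hypothetical and chosen only for illustration.

```python
# Hypothetical records; None marks a missing value.
records = [
    {"id": 1, "min_value": 12.5, "max_value": 30.1},
    {"id": 2, "min_value": None, "max_value": 28.4},
    {"id": 3, "min_value": 15000.0, "max_value": 31.0},
    {"id": 4, "min_value": 10.2, "max_value": None},
]

# Assumed plausible range for both fields (an illustrative choice).
PLAUSIBLE_RANGE = (-100.0, 100.0)


def find_issues(record):
    """Return a list of data-quality problems found in one record."""
    issues = []
    low, high = PLAUSIBLE_RANGE
    for field_name in ("min_value", "max_value"):
        value = record[field_name]
        if value is None:
            issues.append(f"{field_name} is missing")
        elif not (low <= value <= high):
            issues.append(f"{field_name}={value} is outside the plausible range")
    return issues


# Keep only the records that have at least one problem.
report = {}
for record in records:
    problems = find_issues(record)
    if problems:
        report[record["id"]] = problems

for record_id, problems in report.items():
    print(record_id, problems)
```

Record 1 passes, records 2 and 4 are flagged as incomplete, and record 3 is flagged for its implausible minimum.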

5. VALUE

After Volume, Velocity, Variety and Veracity, there is one more V to take into account when looking at Big Data: Value. It is all well and good to have access to big data, but unless we can turn it into value it is useless. Turning it into value means asking: is it adding to the benefits of the organizations that analyze it? Is the organization working on Big Data achieving a high ROI (Return On Investment)? Unless working on Big Data adds to their profits, it is useless.

As discussed under Variety, different types of data are generated every day. So, let us now understand the types of data:

Types of Big Data

Big Data could be of three types:

- Structured

- Semi-Structured

- Unstructured

- Structured

Data that can be stored and processed in a fixed format is called structured data. Data stored in a relational database management system (RDBMS) is one example of ‘structured’ data. Structured data is easy to process because it has a fixed schema. Structured Query Language (SQL) is often used to manage this kind of data.

- Semi-Structured

Semi-structured data does not follow the formal structure of a data model, such as a table definition in a relational DBMS, but it nevertheless has some organizational properties, like tags and other markers that separate semantic elements, which make it easier to analyze. XML files and JSON documents are examples of semi-structured data.

- Unstructured

Data that has an unknown form, cannot be stored in an RDBMS and cannot be analyzed unless it is transformed into a structured format is called unstructured data. Text files and multimedia content such as images, audio and video are examples of unstructured data. Unstructured data is growing faster than the other types; experts say that 80 percent of the data in an organization is unstructured.

So far, I have covered only the introduction to Big Data. The rest of this Big Data tutorial covers examples, applications and challenges.
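The difference between the three types shows up in how a program has to read them. The sketch below uses made-up sample data and only the Python standard library: the structured rows share fixed columns, the semi-structured documents carry their own keys (which may differ per record), and the unstructured text has no fields at all until some processing imposes them.

```python
import csv
import io
import json

# Structured: every row has the same fixed columns (sample data is made up).
structured_source = io.StringIO("id,name,city\n1,Asha,Lucknow\n2,Ravi,Kanpur\n")
rows = list(csv.DictReader(structured_source))

# Semi-structured: keys act as markers, but records need not share a schema.
semi_structured_source = (
    '[{"id": 1, "name": "Asha", "tags": ["student"]},'
    ' {"id": 2, "name": "Ravi", "phone": "n/a"}]'
)
docs = json.loads(semi_structured_source)

# Unstructured: free text; structure (here, a word count) must be derived.
text = "Asha from Lucknow wrote that the lecture on big data was useful."
word_count = len(text.split())

print(rows[0]["city"])          # Lucknow
print(sorted(docs[1].keys()))   # ['id', 'name', 'phone']
print(word_count)               # 12
```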

Examples of Big Data

Every day we upload millions of bytes of data. 90% of the world’s data has been created in the last two years.

- Walmart handles more than 1 million customer transactions every hour.

- Facebook stores, accesses, and analyzes 30+ Petabytes of user generated data.

- More than 230 million tweets are created every day.

- More than 5 billion people are calling, texting, tweeting and browsing on mobile phones worldwide.

- YouTube users upload 48 hours of new video every minute of the day.

- Amazon handles 15 million customer click stream user data per day to recommend products.

- 294 billion emails are sent every day. Email services analyze this data to detect spam.

- Modern cars have close to 100 sensors that monitor fuel level, tire pressure and so on, so each vehicle generates a lot of sensor data.

Applications of Big Data

We cannot talk about data without talking about the people who benefit from Big Data applications. Almost all industries today leverage Big Data applications in one way or another.

- Smarter Healthcare: Using petabytes of patient data, an organization can extract meaningful information and build applications that predict a patient’s deteriorating condition in advance.

- Telecom: The telecom sector collects information, analyzes it and provides solutions to different problems. By using Big Data applications, telecom companies have been able to significantly reduce data packet loss, which occurs when networks are overloaded, and thus provide a seamless connection to their customers.

- Retail: Retail has some of the tightest margins and is one of the greatest beneficiaries of big data. The main benefit of big data in retail is understanding consumer behavior. Amazon’s recommendation engine, for example, makes suggestions based on the consumer’s browsing history.

- Traffic control: Traffic congestion is a major challenge for many cities globally. Effective use of data and sensors will be key to managing traffic better as cities become increasingly densely populated.

- Manufacturing: Analyzing big data in the manufacturing industry can reduce component defects, improve product quality, increase efficiency, and save time and money.

- Search Quality: Every time we extract information from Google, we simultaneously generate data for it. Google stores this data and uses it to improve its search quality.

Someone has rightly said: “Not everything in the garden is Rosy!”. So far in this Big Data tutorial, I have shown you only the rosy picture of Big Data. But if it were so easy to leverage Big Data, don’t you think every organization would invest in it? Let me tell you upfront: that is not the case. Several challenges come along when you work with Big Data.

Now that you are familiar with Big Data and its various features, the next section sheds some light on the major challenges it faces.

Challenges with Big Data

Here are a few challenges that come along with Big Data:

- Data Quality – The problem here is the 4th V, i.e. Veracity. The data is very messy, inconsistent and incomplete. Dirty data costs companies in the United States $600 billion every year.

- Discovery – Finding insights in Big Data is like finding a needle in a haystack. Analyzing petabytes of data with extremely powerful algorithms to find patterns and insights is very difficult.

- Storage – The more data an organization has, the more complex the problems of managing it can become. The question that arises here is “Where to store it?”. We need a storage system which can easily scale up or down on-demand.

- Analytics – In the case of Big Data, most of the time we are unaware of the kind of data we are dealing with, so analyzing that data is even more difficult.

- Security – Since the data is huge in size, keeping it secure is another challenge. It includes user authentication, restricting access based on a user, recording data access histories, proper use of data encryption etc.

- Lack of Talent – There are many Big Data projects in major organizations, but assembling a sophisticated team of developers, data scientists and analysts who also have sufficient domain knowledge is still a challenge.

Augmented Reality

What Is Augmented Reality?

Augmented reality (AR) is an enhanced version of the real physical world that is achieved through the use of digital visual elements, sound, or other sensory stimuli delivered via technology. It is a growing trend among companies involved in mobile computing and business applications in particular.

Amid the rise of data collection and analysis, one of augmented reality’s primary goals is to highlight specific features of the physical world, increase understanding of those features, and derive smart and accessible insight that can be applied to real-world applications. Such big data can help inform companies' decision-making and provide insight into consumer spending habits, among other things.

KEY TAKEAWAYS

- Augmented reality (AR) involves overlaying visual, auditory, or other sensory information onto the world in order to enhance one's experience.

- Retailers and other companies can use augmented reality to promote products or services, launch novel marketing campaigns, and collect unique user data.

- Unlike virtual reality, which creates its own cyber environment, augmented reality adds to the existing world as it is.

Understanding Augmented Reality

Augmented reality continues to develop and become more pervasive among a wide range of applications. Since its conception, marketers and technology firms have had to battle the perception that augmented reality is little more than a marketing tool. However, there is evidence that consumers are beginning to derive tangible benefits from this functionality and expect it as part of their purchasing process.

For example, some early adopters in the retail sector have developed technologies designed to enhance the consumer shopping experience. By incorporating augmented reality into catalog apps, stores let consumers visualize how different products would look in different environments. For furniture, shoppers point the camera at the appropriate room and the product appears in the foreground.

Elsewhere, augmented reality’s benefits could extend to the healthcare sector, where it could play a much bigger role. One way would be through apps that enable users to see highly detailed, 3D images of different body systems when they hover their mobile device over a target image. For example, augmented reality could be a powerful learning tool for medical professionals throughout their training.

Some experts have long speculated that wearable devices could be a breakthrough for augmented reality. Whereas smartphones and tablets show a tiny portion of the user’s landscape, smart eyewear, for example, may provide a more complete link between real and virtual realms if it develops enough to become mainstream.

Categories of AR Apps and Examples

a) Augmented Reality in 3D viewers:

AUGMENT

Sun-Seeker

b) Augmented Reality in Browsers:

ARGON4

AR Browser SDK

c) Augmented Reality Games:

Pokémon Go

REAL STRIKE

d) Augmented Reality GPS:

AR GPS Drive/Walk Navigation

AR GPS Compass Map 3D

What Is Virtual Reality?

Virtual reality (VR) refers to a computer-generated simulation in which a person can interact within an artificial three-dimensional environment using electronic devices, such as special goggles with a screen or gloves fitted with sensors. In this simulated artificial environment, the user is able to have a realistic-feeling experience.

Augmented reality (AR) is different from VR, in that AR enhances the real world as it exists with graphical overlays and does not create a fully immersive experience.

KEY TAKEAWAYS

- Virtual reality (VR) creates an immersive artificial world that can seem quite real, via the use of technology.

- Through a virtual reality viewer, users can look up, down, or any which way, as if they were actually there.

- Virtual reality has many use-cases, including entertainment and gaming, or acting as a sales, educational, or training tool.

Understanding Virtual Reality

· The concept of virtual reality is built on the natural combination of two words: the virtual and the real. The former means "nearly" or "conceptually," which leads to an experience that is near-reality through the use of technology. Software creates and serves up virtual worlds that are experienced by users who wear hardware devices such as goggles, headphones, and special gloves. Together, these let the user view and interact with the virtual world as if from within.

· To understand virtual reality, let's draw a parallel with real-world observations. We understand our surroundings through our senses and the perception mechanisms of our body. Senses include taste, touch, smell, sight, and hearing, as well as spatial awareness and balance. The inputs gathered by these senses are processed by our brains to make interpretations of the objective environment around us. Virtual reality attempts to create an illusory environment that can be presented to our senses with artificial information, making our minds believe it is (almost) a reality.

Augmented Reality vs. Virtual Reality

Augmented reality uses the existing real-world environment and puts virtual information on top of it to enhance the experience.

In contrast, virtual reality immerses users, allowing them to "inhabit" an entirely different environment altogether, notably a virtual one created and rendered by computers. Users may be immersed in an animated scene or an actual location that has been photographed and embedded in a virtual reality app. Through a virtual reality viewer, users can look up, down, or any which way, as if they were actually there.

Grid Computing

Grid Computing can be defined as a network of computers working together to perform a task that would be difficult for a single machine. All machines on that network work under the same protocol to act as a virtual supercomputer. The tasks they work on may include analyzing huge datasets or running simulations that require high computing power. Computers on the network contribute resources such as processing power and storage capacity to the network.

Grid Computing is a subset of distributed computing, in which a virtual supercomputer comprises machines connected by a network, mostly Ethernet or sometimes the Internet. It can also be seen as a form of parallel computing in which, instead of many CPU cores on a single machine, the cores are spread across various locations. The concept of grid computing isn’t new, but it is not yet perfected, as no standard rules and protocols have been established and widely accepted.

Working:

A Grid computing network mainly consists of these three types of machines:

1. Control Node:

A computer, usually a server or a group of servers, that administers the whole network and keeps account of the resources in the network pool.

2. Provider:

A computer that contributes its resources to the network resource pool.

3. User:

A computer that uses the resources on the network.

When a computer requests resources from the control node, the control node gives the user access to the resources available on the network. When a computer is not in use, it should ideally contribute its resources to the network. Hence a normal computer on the network can switch between being a user and a provider based on its needs. The nodes may be machines with similar platforms running the same OS, called homogeneous networks, or machines with different platforms running different operating systems, called heterogeneous networks. Support for heterogeneous machines is what distinguishes grid computing from other distributed computing architectures.

To control the network and its resources, a software/networking protocol generally known as middleware is used. It is responsible for administering the network, and the control nodes are merely its executors. Because a grid computing system should use only the unused resources of a computer, it is the job of the control node to ensure that no provider is overloaded with tasks.

Another job of the middleware is to authorize any process that is executed on the network. In a grid computing system, a provider gives users permission to run anything on its computer, which is a huge security threat for the network. The middleware should therefore ensure that no unwanted task is executed on the network.
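The two middleware duties described above, authorizing tasks and keeping providers from being overloaded, can be sketched as a toy in-memory model. This is not a real grid middleware: the class names, the allow-list approach to authorization, and the "work unit" capacity measure are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Provider:
    """A machine that lends spare capacity (in arbitrary work units) to the grid."""
    name: str
    capacity: int
    tasks: list = field(default_factory=list)

    @property
    def load(self):
        # Total cost of the tasks currently assigned to this provider.
        return sum(cost for _, cost in self.tasks)


class ControlNode:
    """Tracks the resource pool and applies the middleware rules."""

    def __init__(self, providers, allowed_tasks):
        self.providers = providers
        self.allowed_tasks = set(allowed_tasks)

    def submit(self, task_name, cost):
        # Duty 1: authorize. Unknown tasks are rejected outright.
        if task_name not in self.allowed_tasks:
            return None
        # Duty 2: never overload a provider beyond its spare capacity.
        candidates = [p for p in self.providers if p.load + cost <= p.capacity]
        if not candidates:
            return None
        # Pick the least-loaded provider that can take the task.
        chosen = min(candidates, key=lambda p: p.load)
        chosen.tasks.append((task_name, cost))
        return chosen.name


grid = ControlNode(
    providers=[Provider("pc-a", capacity=4), Provider("pc-b", capacity=2)],
    allowed_tasks={"render", "simulate"},
)

results = [
    grid.submit("render", 3),      # both idle, first provider is chosen
    grid.submit("simulate", 2),    # pc-a would exceed capacity, so pc-b
    grid.submit("render", 1),      # only pc-a has room left
    grid.submit("mine-coins", 1),  # not on the allow-list: rejected
    grid.submit("render", 1),      # no provider has spare capacity: rejected
]
print(results)  # ['pc-a', 'pc-b', 'pc-a', None, None]
```

A real system would also need to handle providers joining and leaving, task completion, and result collection; the sketch covers only the allocation decision.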

Advantages of Grid Computing:

1. It is not centralized, as there are no servers required, except the control node which is just used for controlling and not for processing.

2. Multiple heterogeneous machines, i.e. machines with different operating systems, can use a single grid computing network.

3. Tasks can be performed in parallel across various physical locations, and users don’t have to pay for it (with money).

Green computing

Green computing, also called green technology, is the environmentally responsible use of computers and related resources. Such practices include the implementation of energy-efficient central processing units (CPUs), servers and peripherals as well as reduced resource consumption and proper disposal of electronic waste (e-waste).

One of the earliest initiatives toward green computing in the United States was the voluntary labeling program known as Energy Star. It was conceived by the Environmental Protection Agency (EPA) in 1992 to promote energy efficiency in hardware of all kinds. The Energy Star label became a common sight, especially in notebook computers and displays. Similar programs have been adopted in Europe and Asia.

Government regulation, however well-intentioned, is only part of an overall green computing philosophy. The work habits of computer users and businesses can be modified to minimize adverse impact on the global environment. Here are some steps that can be taken:

· Power-down the CPU and all peripherals during extended periods of inactivity.

· Try to do computer-related tasks during contiguous, intensive blocks of time, leaving hardware off at other times.

· Power-up and power-down energy-intensive peripherals such as laser printers according to need.

· Use liquid-crystal-display (LCD) monitors rather than cathode-ray-tube (CRT) monitors.

· Use notebook computers rather than desktop computers whenever possible.

· Use the power-management features to turn off hard drives and displays after several minutes of inactivity.

· Minimize the use of paper and properly recycle waste paper.

· Dispose of e-waste according to federal, state, and local regulations.

· Employ alternative energy sources for computing workstations, servers, networks and data centers.

Quantum Computing

· Quantum computers are machines that use the properties of quantum physics to store data and perform computations. This can be extremely advantageous for certain tasks where they could vastly outperform even our best supercomputers.

· Classical computers, which include smartphones and laptops, encode information in binary “bits” that can either be 0s or 1s. In a quantum computer, the basic unit of memory is a quantum bit or qubit.

· Qubits are made using physical systems, such as the spin of an electron or the orientation of a photon. These systems can be in many different arrangements all at once, a property known as quantum superposition. Qubits can also be inextricably linked together using a phenomenon called quantum entanglement. The result is that a series of qubits can represent different things simultaneously.

· For instance, eight bits is enough for a classical computer to represent any number between 0 and 255. But eight qubits is enough for a quantum computer to represent every number between 0 and 255 at the same time. A few hundred entangled qubits would be enough to represent more numbers than there are atoms in the universe.

· This is where quantum computers get their edge over classical ones. In situations where there are a large number of possible combinations, quantum computers can consider them simultaneously. Examples include trying to find the prime factors of a very large number or the best route between two places.

· However, there may also be plenty of situations where classical computers will still outperform quantum ones. So the computers of the future may be a combination of both these types.

· For now, quantum computers are highly sensitive: heat, electromagnetic fields and collisions with air molecules can cause a qubit to lose its quantum properties. This process, known as quantum decoherence, causes the system to crash, and it happens more quickly the more particles that are involved.

· Quantum computers need to protect qubits from external interference, either by physically isolating them, keeping them cool or zapping them with carefully controlled pulses of energy. Additional qubits are needed to correct for errors that creep into the system.
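The counting argument above can be made concrete with plain Python. The sketch assumes the simplest possible case, an equal superposition, and represents the state of n qubits as a list of 2**n amplitudes, which is how a classical simulation would have to store it. The figure of about 10**80 atoms in the observable universe is a commonly quoted rough estimate and is used here as an assumption. Note also that measuring such a register yields only one of the values, so the sketch illustrates the size of the state, not a speed-up.

```python
import math


def classical_values(n_bits):
    """Number of distinct values n classical bits can encode (one at a time)."""
    return 2 ** n_bits


def uniform_superposition(n_qubits):
    """Amplitudes of an equal superposition over all 2**n basis states."""
    size = 2 ** n_qubits
    amplitude = 1 / math.sqrt(size)
    return [amplitude] * size


state = uniform_superposition(8)

print(classical_values(8))   # 256
print(len(state))            # 256 amplitudes, one per number from 0 to 255

# The squared amplitudes are probabilities and must sum to 1.
total_probability = sum(a * a for a in state)
print(math.isclose(total_probability, 1.0))   # True

# Assumption: rough, commonly quoted estimate of atoms in the observable universe.
ATOMS_IN_UNIVERSE_ESTIMATE = 10 ** 80
print(2 ** 300 > ATOMS_IN_UNIVERSE_ESTIMATE)  # True
```

The list doubles in length with every added qubit, which is why simulating even a few hundred qubits this way is out of reach for classical machines.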

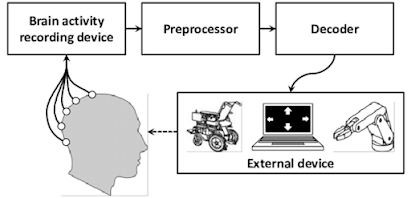

Brain-Computer Interface (BCI)

A Brain-Computer Interface (BCI) is a technology that allows a human to control a computer, peripheral or other electronic device with thought.

• It does so by using electrodes to detect electric signals in the brain, which are sent to a computer.

• The computer then translates these electric signals into data, which is used to control a computer or a device linked to a computer.

How does the brain turn thoughts into action?

The brain is full of neurons; these neurons are connected to each other by axons and dendrites.

• Your neurons are at work whenever you think about anything or do anything.

• Your neurons connect with each other to form a super highway for nerve impulses to travel from neuron to neuron to produce thought, hearing, speech, or movement.

• If you have an itch and you reach to scratch it, you received a stimulus (an itch) and reacted to the stimulus by scratching.

• The electrical signals that generate the thought and action travel at a rate of about 250 feet per second, or faster in some cases.

Interface

The easiest and least invasive method is a set of electrodes -- a device known as an electroencephalograph (EEG) -- attached to the scalp. The electrodes can read brain signals. To get a higher-resolution signal, scientists can implant electrodes directly into the gray matter of the brain itself, or on the surface of the brain, beneath the skull.
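The step in which the computer translates electric signals into a control command can be illustrated with a deliberately simple toy. Real BCI systems filter the signal, extract features and use trained classifiers; the sketch below only thresholds the average power of a window of samples. The synthetic sine-wave "signals", the threshold value and the `MOVE`/`REST` command names are all assumptions made for illustration.

```python
import math


def signal_power(samples):
    """Mean squared amplitude of a window of samples."""
    return sum(s * s for s in samples) / len(samples)


def to_command(samples, threshold):
    """Map a window of samples to a device command using a power threshold."""
    return "MOVE" if signal_power(samples) > threshold else "REST"


# Synthetic stand-ins for electrode readings (not real EEG data).
calm_window = [0.1 * math.sin(0.3 * t) for t in range(100)]
active_window = [1.0 * math.sin(0.3 * t) for t in range(100)]

THRESHOLD = 0.1  # assumed value, chosen to separate the two synthetic windows

print(to_command(calm_window, THRESHOLD))    # REST
print(to_command(active_window, THRESHOLD))  # MOVE
```

The higher-resolution signals obtained from implanted electrodes would, in this picture, make the two cases easier to tell apart.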

Applications

• Provide disabled people with communication, environment control, and movement restoration.

• Provide enhanced control of devices such as wheelchairs, vehicles, or assistance robots for people with disabilities.

• Provide additional channel of control in computer games.

• Monitor attention in long-distance drivers or aircraft pilots, and send out alerts and warnings when attention drops.

• Develop intelligent relaxation devices.

Advantages of BCI

Eventually, this technology could:

• Allow paralyzed people to control prosthetic limbs with their mind.

• Transmit visual images to the mind of a blind person, allowing them to see.

• Transmit auditory data to the mind of a deaf person, allowing them to hear.

• Allow gamers to control video games with their minds.

• Allow a mute person to have their thoughts displayed and spoken by a computer.

Disadvantages of BCI

• Research is still in its beginning stages.

• The current technology is crude.

• Ethical issues may prevent its development.

• Electrodes outside of the skull can detect very few electric signals from the brain.

• Electrodes placed inside the skull create scar tissue in the brain.

Conclusion

As BCI technology further advances, brain tissue may one day give way to implanted silicon chips thereby creating a completely computerized simulation of the human brain that can be augmented at will. Futurists predict that from there, superhuman artificial intelligence won't be far behind.
